For fun, I’ve been trying out Claude Code on a variety of my personal projects, not expecting much. I wanted to find out how hard is too hard for Claude. A fairly straightforward board game proved too much. And then, debugging broken features and adding new language features to a somewhat complex language was a breeze. I have some idea why that may be, and a few suggestions for how to get similarly good results.

Yes, I’m writing about LLM-based AI again. I’ll stop after this for a while. I know I said it would destroy everything, but it’s too interesting to quit.

TLDR: Clearly modeling intent with concise code (type definitions especially) is the best way to prompt an AI code assistant.

Too Hard: The Game

I have a little board game project I use as an excuse to learn new languages and techniques. I can’t really get into a new language unless I have something to build, so whenever I want to learn one, I implement the game’s definition-script parser, loading and saving, and the game board model in it. One of these days I’ll finish the computer player logic and make it playable.

This time I decided to port it to Go, a language I’ve only used a bit. I figured I could convert the parser (a hand-rolled recursive descent parser and lexer) and add a little web API to support a game board as a web app. Building a proper map is pretty tedious, though, so AI help is welcome.

So I decided to try out Claude Code to see what it could do on a porting task (my first with Claude). I started up in the existing C++ repo and asked it to convert the code straight to Go. Surprisingly, Claude struggled greatly converting the game definition file parser to Go. This should have been the easy part: the logic needed in Go is basically identical to the C++, and memory management is simpler in Go. Lexing is easy, and there’s a lot of example Go code out there to learn from. Even if the parsing style is less common (I doubt that), my code is quite clean and logical (in this case, at least). It JUST. COULDN’T. DO. IT.

After several hours I’d given it a tutorial in how the parsing worked, and “we” finally had something that sort of worked. But Claude gave up after failing to root out the last bugs and took a shortcut: it built and ran my C++ utility that converts game definition scripts to JSON.

The AI gets really focused on the goal and will do almost anything to route around blockers. What I really wanted was a Go implementation of the parser, since the script format is what makes customizing the game easy for humans. Oh well. It simply could not grasp the concept of a BNF-like grammar description and recursive descent, or really what the point of the code was at all. My hypothesis is that while the pattern is very straightforward, the parser and lexer logic is spread across four files and may not be recognizable for what it is.
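For readers who haven’t written one, here is a minimal sketch of the pattern in question: a lexer plus a recursive descent parser in which each BNF-like rule in the header comment maps to exactly one function. It’s in Rust rather than C++ or Go, it handles plain integer arithmetic rather than my game definition format, and the names (`Token`, `lex`, `Parser`, `parse`) are hypothetical. To keep it short, each rule computes a value instead of building a syntax tree.

```rust
// Grammar, one function per rule:
//   expr   := term (('+' | '-') term)*
//   term   := factor ('*' factor)*
//   factor := NUMBER | '(' expr ')' | '-' factor

#[derive(Clone, Debug, PartialEq)]
enum Token { Num(i64), Plus, Minus, Star, LParen, RParen }

/// Lexer: turn source text into a flat list of tokens.
fn lex(src: &str) -> Result<Vec<Token>, String> {
    let chars: Vec<char> = src.chars().collect();
    let (mut tokens, mut i) = (Vec::new(), 0);
    while i < chars.len() {
        let token = match chars[i] {
            c if c.is_whitespace() => { i += 1; continue; }
            c if c.is_ascii_digit() => {
                let start = i;
                while i < chars.len() && chars[i].is_ascii_digit() { i += 1; }
                let text: String = chars[start..i].iter().collect();
                tokens.push(Token::Num(text.parse::<i64>().map_err(|e| e.to_string())?));
                continue;
            }
            '+' => Token::Plus,
            '-' => Token::Minus,
            '*' => Token::Star,
            '(' => Token::LParen,
            ')' => Token::RParen,
            c => return Err(format!("unexpected character '{c}'")),
        };
        tokens.push(token);
        i += 1;
    }
    Ok(tokens)
}

// Parser: each method implements exactly one grammar rule.
struct Parser { tokens: Vec<Token>, pos: usize }

impl Parser {
    fn peek(&self) -> Option<Token> { self.tokens.get(self.pos).cloned() }
    fn next(&mut self) -> Option<Token> {
        let token = self.peek();
        self.pos += 1;
        token
    }
    // expr := term (('+' | '-') term)*
    fn expr(&mut self) -> Result<i64, String> {
        let mut value = self.term()?;
        loop {
            match self.peek() {
                Some(Token::Plus) => { self.pos += 1; value += self.term()?; }
                Some(Token::Minus) => { self.pos += 1; value -= self.term()?; }
                _ => return Ok(value),
            }
        }
    }

    // term := factor ('*' factor)*
    fn term(&mut self) -> Result<i64, String> {
        let mut value = self.factor()?;
        while self.peek() == Some(Token::Star) {
            self.pos += 1;
            value *= self.factor()?;
        }
        Ok(value)
    }

    // factor := NUMBER | '(' expr ')' | '-' factor
    fn factor(&mut self) -> Result<i64, String> {
        match self.next() {
            Some(Token::Num(n)) => Ok(n),
            Some(Token::Minus) => Ok(-self.factor()?),
            Some(Token::LParen) => {
                let value = self.expr()?;
                match self.next() {
                    Some(Token::RParen) => Ok(value),
                    other => Err(format!("expected ')', found {other:?}")),
                }
            }
            other => Err(format!("unexpected token {other:?}")),
        }
    }
}

/// Lex and parse, rejecting any tokens left over after a complete `expr`.
fn parse(src: &str) -> Result<i64, String> {
    let mut parser = Parser { tokens: lex(src)?, pos: 0 };
    let value = parser.expr()?;
    match parser.peek() {
        None => Ok(value),
        Some(t) => Err(format!("unexpected trailing token {t:?}")),
    }
}

fn main() {
    println!("{:?}", parse("2 + 3 * (4 - 1)")); // Ok(11)
    println!("{:?}", parse("-(2 + 3) * 2")); // Ok(-10)
}
```

Operator precedence falls out of which rule calls which: `expr` only ever combines whole `term`s, so `*` binds tighter than `+` without any precedence table.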

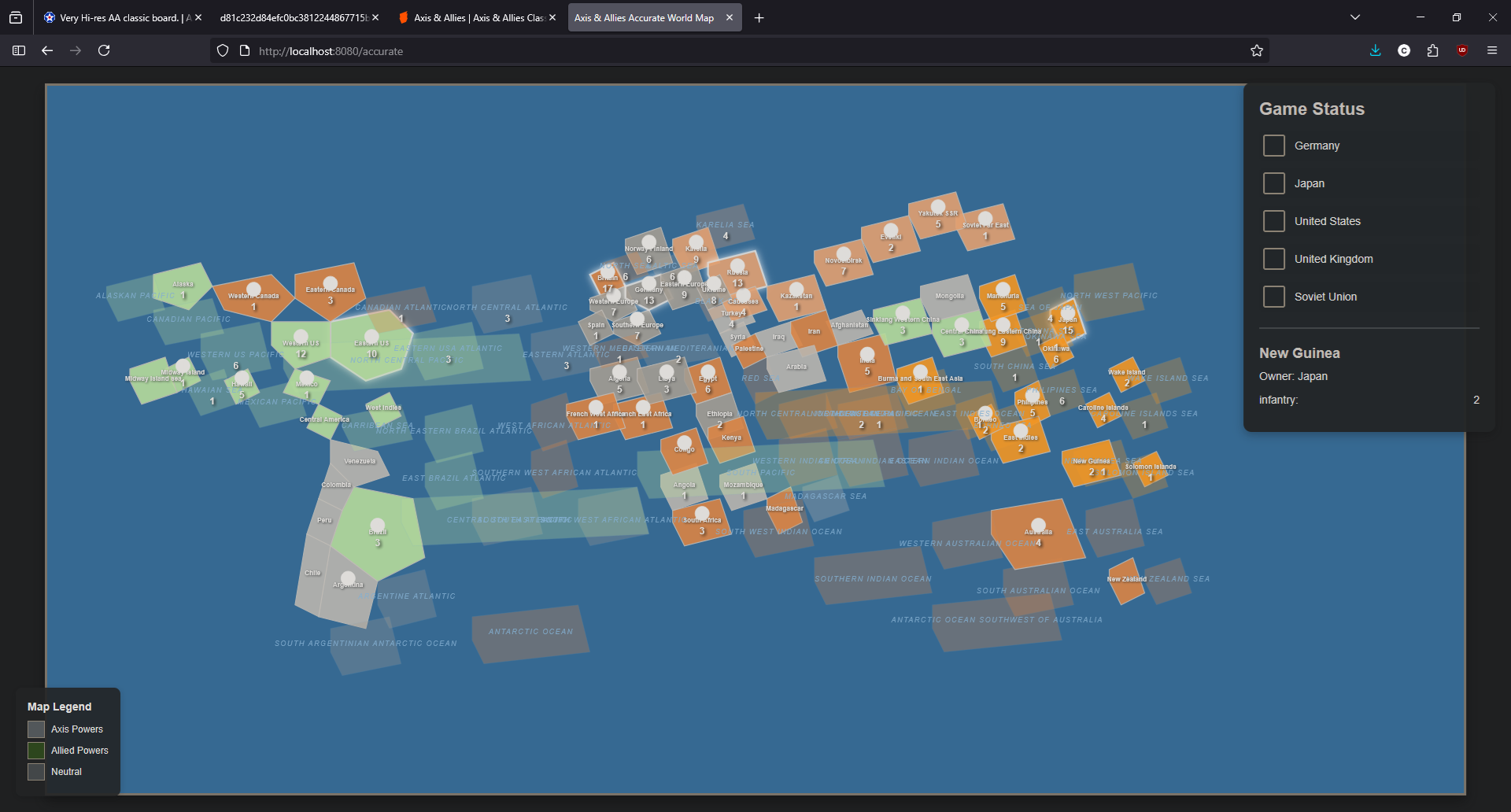

After giving up on the parser port, I moved on to building the game UI in Go. I had it set up a web service, which went fine, as it should. Then I had it build a map page based on the data structures deserialized from the converted JSON. These are real-world countries, so surely it could render a cartoon-level map? Not at all. It persisted in trying to use CSS to make blobs with country labels. I asked it to find real maps on the web, but it can’t do that. Finally I took screenshots of its miserable attempts, and Claude agreed it was making terrible maps. (You can reference PNG or JPEG files or give it URLs to pictures.) But after it viewed its mistakes, its next attempts weren’t much better.

Check this out:

I finally downloaded a labeled world map (heavily colored for game use) and asked it to place the stats and clickable areas appropriately based on the labels. It utterly failed, and no matter how I tried to help, it couldn’t do it.

Both of these tasks are the sort of thing a human could do pretty easily, in the sense of not getting confused or making fundamental mistakes. The difficulty for humans is that both tasks (the code conversion and the map-making) are very time-consuming and error-prone. I’d estimate that doing a good job on the map is a week of work (seriously, avoiding mistakes is harder than you think). The code conversion depends on programming skill, but even a novice could go down the right path, just very slowly. I’d hoped to get a 95% map in an hour or so, but no such luck.

Easy: The Programming Language Built in Rust

First, I have to wonder whether Claude Code is as good in Go as I’ve heard. Maybe I’m doing it wrong; I don’t know. Rust is supposed to be harder, but I found the opposite. That makes some sense: the Rust compiler has really good error messages, and they often include a reference to more information about the error or warning that Claude can look up.

My project Lift-Lang is a small hobby project in Rust for learning how to compile with Cranelift, the alternative Rust compiler back end. Of course, I got bogged down and didn’t reach that point, so maybe AI could help get me there.

The language is a pure expression language with function and type definitions as the only first-class entities; these are also expressions. Lift has strong static type checking, and type definitions can nest. The project had reached the point where a REPL mostly worked on the implemented language features, but I hadn’t finished the type checker.

After Claude evaluated the project with /init and read my notes, I successfully did the following in one afternoon:

- Finished the type checker. At first, Claude wanted to leave the hard cases as “unsolved” in the AST, but I pushed it by explaining that I wanted to add a compiler later and “unsolved” at compile time is unacceptable.

Getting the type checking functioning proved important to Claude’s ability to write and execute useful tests for everything I added afterward.
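The rule I pushed for fits in a few lines: after inference, nothing may still be `Unsolved`. Here’s a minimal sketch of that idea over cut-down, hypothetical `DataType` and `Expr` enums (not Lift’s real `semantic_analysis.rs`, which isn’t shown in this post). Literals infer their own type, `let` records the inferred type of a name, and anything still unresolved is reported as a type error instead of being left for run time. Requiring both operands of `+` to have the same numeric type is a simplification for the sketch.

```rust
use std::collections::HashMap;

/// Cut-down stand-in for Lift's `DataType`; `Unsolved` means "not inferred yet".
#[derive(Clone, Debug, PartialEq)]
enum DataType {
    Unsolved,
    Int,
    Flt,
    Bool,
}

/// Cut-down, hypothetical expression tree.
#[derive(Clone, Debug)]
enum Expr {
    Int(i64),
    Flt(f64),
    Bool(bool),
    Variable(String),
    /// `let name: annotation = value`; `annotation` is `Unsolved` when omitted.
    Let { name: String, annotation: DataType, value: Box<Expr> },
    Add(Box<Expr>, Box<Expr>),
}

/// Infer the type of `expr`, recording `let`-bound names in `env`.
/// Never returns `Ok(DataType::Unsolved)`: anything that stays unresolved
/// is a compile-time error rather than something deferred to run time.
fn typecheck(expr: &Expr, env: &mut HashMap<String, DataType>) -> Result<DataType, String> {
    let ty = match expr {
        Expr::Int(_) => DataType::Int,
        Expr::Flt(_) => DataType::Flt,
        Expr::Bool(_) => DataType::Bool,
        // Unknown names fall through as Unsolved and are rejected below.
        Expr::Variable(name) => env.get(name).cloned().unwrap_or(DataType::Unsolved),
        Expr::Let { name, annotation, value } => {
            let inferred = typecheck(value, env)?;
            if *annotation != DataType::Unsolved && *annotation != inferred {
                return Err(format!("'{name}' is declared {annotation:?} but its value is {inferred:?}"));
            }
            env.insert(name.clone(), inferred.clone());
            inferred
        }
        // Simplification: both operands must have the same numeric type.
        Expr::Add(left, right) => {
            let (lt, rt) = (typecheck(left, env)?, typecheck(right, env)?);
            if lt == rt && matches!(lt, DataType::Int | DataType::Flt) {
                lt
            } else {
                return Err(format!("cannot add {lt:?} and {rt:?}"));
            }
        }
    };
    if ty == DataType::Unsolved {
        return Err(format!("could not resolve a type for {expr:?}"));
    }
    Ok(ty)
}

fn main() {
    let mut env = HashMap::new();
    let one_plus_two = Expr::Add(Box::new(Expr::Int(1)), Box::new(Expr::Int(2)));
    let x = Expr::Let { name: "x".into(), annotation: DataType::Unsolved, value: Box::new(one_plus_two) };
    println!("{:?}", typecheck(&x, &mut env)); // Ok(Int)
    println!("{:?}", typecheck(&Expr::Variable("x".into()), &mut env)); // Ok(Int)
    println!("{:?}", typecheck(&Expr::Variable("y".into()), &mut env));
}
```

The final check is the whole policy in one place: a compiler back end downstream can assume every node it sees has a concrete type.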

Then I added support to the parser and interpreter, with unit and integration tests, for:

- Chained `else if` on `if` expressions (I only had `if … {} else {}`)

- Comments allowed by the parser

- Unary `-` operator for negative numbers

- Map literals and List literals. This was kind of a big deal.
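The CLAUDE.md file reproduced later in this post describes how the `else if` support ended up working: each `else if` becomes a nested `If`, and a missing `else` defaults to `Unit`. Here is a minimal sketch of that desugaring step, assuming a cut-down `Expr` that keeps only the `If { cond, then, final_else }` shape from `syntax.rs`; the `Cond`/`Value` placeholder variants and the `desugar_if_chain` helper are hypothetical, not code from Lift’s grammar.

```rust
/// Cut-down stand-in for Lift's `Expr`: only the `If` shape from syntax.rs
/// plus placeholder nodes for conditions and branch bodies.
#[derive(Clone, Debug, PartialEq)]
enum Expr {
    Cond(String),
    Value(String),
    If { cond: Box<Expr>, then: Box<Expr>, final_else: Box<Expr> },
    Unit,
}

/// Turn `if c1 { b1 } else if c2 { b2 } ... else { e }` into nested `If` nodes.
/// Folding from the last branch backwards makes each `else if` the
/// `final_else` of the branch before it; with no final `else`, the
/// innermost `final_else` is `Unit`. (A parser would normally supply at
/// least one branch; an empty list just yields the else value.)
fn desugar_if_chain(branches: Vec<(Expr, Expr)>, final_else: Option<Expr>) -> Expr {
    branches
        .into_iter()
        .rev()
        .fold(final_else.unwrap_or(Expr::Unit), |acc, (cond, then)| Expr::If {
            cond: Box::new(cond),
            then: Box::new(then),
            final_else: Box::new(acc),
        })
}

fn main() {
    let tree = desugar_if_chain(
        vec![
            (Expr::Cond("score >= 90".into()), Expr::Value("'A'".into())),
            (Expr::Cond("score >= 80".into()), Expr::Value("'B'".into())),
        ],
        None,
    );
    // The outer `If` tests `score >= 90`; its `final_else` is the
    // `score >= 80` test, whose own `final_else` is `Unit`.
    println!("{tree:#?}");
}
```

Because the chain collapses into ordinary `If` nodes, the interpreter and type checker need no new cases for `else if`.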

A day or two later, in an hour:

- Range expressions (1..3) or “1 to 3”.

- User-defined type aliases with nested types allowed

- Fix bugs in the REPL

- More powerful type inference

- Lots of integration tests

Finally, I had Claude compose a Mandelbrot rendering program to prove Lift can actually do something real. Claude struggled a bit here. For some reason it didn’t understand the standard starting coordinates on the complex plane, even though examples exist all over the internet. However, the basic logic was right, and it devised a smart use of recursion: Lift currently has only immutable variables, so it can’t iteratively update anything.

Once I spelled out what I thought it was doing wrong and handed it an example naive Mandelbrot program, it got it right. To me, this should be so much easier than all the programming language work it did up to that point, so I’m surprised it struggled on this little example. On the other hand, it was writing Lift, a language it had never read before (it had just helped build it!). On the other-other hand, its big mistake wasn’t Lift syntax but basic logic and math. I hear that if you tell it to “think extra carefully” or that “your life depends on this,” it will perform better. Maybe I’ll give that a shot.
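Since Lift variables are immutable, the usual escape-time loop in a Mandelbrot renderer has to be written as recursion: each call receives the current z, computes z² + c, and calls itself with one more iteration. Here is a minimal Rust sketch of that shape. It is not the Lift program Claude wrote; the viewing window (real axis -2.0 to 1.0, imaginary axis -1.2 to 1.2, a common choice), the grid size, and the characters are arbitrary choices for illustration.

```rust
/// Escape-time test for one point c = cr + ci·i, written without mutation:
/// each call computes the next z = z² + c and passes it to itself.
/// Returns how many iterations ran before |z| > 2, or `max` if it never escaped.
fn escape(cr: f64, ci: f64, zr: f64, zi: f64, n: u32, max: u32) -> u32 {
    if n >= max || zr * zr + zi * zi > 4.0 {
        n
    } else {
        escape(cr, ci, zr * zr - zi * zi + cr, 2.0 * zr * zi + ci, n + 1, max)
    }
}

/// Sample the window re ∈ [-2.0, 1.0], im ∈ [-1.2, 1.2] on a `width` × `height`
/// grid (both at least 2) and draw points in the set as '#', others as '.'.
/// Iterator adapters stand in for the recursion Lift would presumably need here too.
fn render(width: u32, height: u32, max: u32) -> String {
    let (re_min, re_max, im_min, im_max) = (-2.0, 1.0, -1.2, 1.2);
    (0..height)
        .map(|row| {
            let ci = im_max - (im_max - im_min) * f64::from(row) / f64::from(height - 1);
            (0..width)
                .map(|col| {
                    let cr = re_min + (re_max - re_min) * f64::from(col) / f64::from(width - 1);
                    if escape(cr, ci, 0.0, 0.0, 0, max) == max { '#' } else { '.' }
                })
                .collect::<String>()
        })
        .collect::<Vec<String>>()
        .join("\n")
}

fn main() {
    println!("{}", render(60, 24, 50));
}
```

The iteration count doubles as the recursion depth, so `max` should stay modest; Rust doesn’t guarantee tail-call elimination.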

So here’s why I believe Claude Code did so well on my hardest project: I had prepared the ground really well.

I’d written a syntax module in Rust that defined exactly what the parser should emit (the parser only emitted a subset of it; my ambition was greater than my energy). I’d also used the LALRPOP parser generator (similar to ANTLR) and partly implemented the language. Very much only partly, though. To me, a LALRPOP grammar file is a somewhat tricky way to define a grammar. That’s mostly down to my not spending much time with it; it’s perfectly logical. I’m more used to coding a lexer and parser by hand and leaving the grammar notation in the comments. For Claude, though, it was an advantage: Claude could examine the generated lexer and parser file and check it after every update to the grammar definition, something that would be tedious for a human developer. There were clear patterns in the LALRPOP grammar definition file, and it could follow those.

Having the syntax module very well filled out allowed Claude to anticipate missing parts of the language at the parsing level. Where the Lift interpreter lacked handling for some syntax, the existing code and the highly detailed Rust type definitions for the language constructs let it fill in the blanks. A human could do this too, but with more mistakes.

Here’s a snippet of my syntax.rs, which has the data structures the parser will emit.


```rust
use std::collections::HashMap;
use std::rc::Rc;

// `KeyData` and `Operator` are also defined in syntax.rs but omitted here.

#[derive(Clone, Debug, PartialEq)]
pub struct Param {
    pub name: String,
    pub data_type: DataType,
    pub default: Option<Expr>,
    pub index: (usize, usize),
}

#[derive(Clone, Debug, PartialEq)]
pub enum DataType {
    Unsolved,
    Optional(Box<DataType>),
    Range(Box<Expr>),
    Str,
    Int,
    Flt,
    Bool,
    Map {
        key_type: Box<DataType>,
        value_type: Box<DataType>,
    },
    List {
        element_type: Box<DataType>,
    },
    Set(Box<DataType>),
    Enum(Vec<String>),
    Struct(Vec<Param>),
    TypeRef(String), // Reference to a user-defined type
}

#[derive(Clone, Debug, PartialEq)]
pub struct KeywordArg {
    pub name: String,
    pub value: Expr,
}

#[derive(Clone, Debug, PartialEq)]
pub enum LiteralData {
    Int(i64),
    Flt(f64),
    Str(Rc<str>),
    Bool(bool),
}

#[derive(Clone, Debug, PartialEq)]
pub struct Function {
    pub params: Vec<Param>,
    pub return_type: DataType,
    pub body: Box<Expr>,
}

#[derive(Clone, Debug, PartialEq)]
pub enum Expr {
    Program {
        body: Vec<Expr>,
        environment: usize,
    },
    Block {
        body: Vec<Expr>,
        environment: usize,
    },
    Output {
        data: Vec<Expr>,
    },

    // Parsed out from the source file; the structure will resemble the source code
    // and is easily scanned and type checked.
    Literal(LiteralData),
    MapLiteral {
        key_type: DataType,
        value_type: DataType,
        data: Vec<(KeyData, Expr)>,
    },
    ListLiteral {
        data_type: DataType,
        data: Vec<Expr>,
    },
    Range(LiteralData, LiteralData),

    // Special case for values accessed and changed during runtime in the interpreter; we
    // may wish to change the hashtable for Map or expand how data is physically represented
    // in memory during execution.
    RuntimeData(LiteralData),
    RuntimeList {
        data_type: DataType,
        data: Vec<Expr>,
    },
    RuntimeMap {
        key_type: DataType,
        value_type: DataType,
        data: HashMap<KeyData, Expr>,
    },

    BinaryExpr {
        left: Box<Expr>,
        op: Operator,
        right: Box<Expr>,
    },
    UnaryExpr {
        op: Operator,
        expr: Box<Expr>,
    },
    Assign {
        name: String,
        value: Box<Expr>,
        index: (usize, usize),
    },
    Variable {
        name: String,
        index: (usize, usize),
    },
    Call {
        fn_name: String,
        index: (usize, usize),
        args: Vec<KeywordArg>,
    },
    DefineFunction {
        fn_name: String,
        index: (usize, usize),
        value: Box<Expr>, // Probably an Expr::Lambda
    },
    Lambda {
        value: Function,
        environment: usize,
    },
    Let {
        var_name: String,
        index: (usize, usize),
        data_type: DataType,
        value: Box<Expr>,
    },
    DefineType {
        type_name: String,
        definition: DataType,
        index: (usize, usize),
    },
    If {
        cond: Box<Expr>,
        then: Box<Expr>,
        final_else: Box<Expr>,
    },
    Match {
        cond: Box<Expr>,
        against: Vec<(Expr, Expr)>,
    },
    While {
        cond: Box<Expr>,
        body: Box<Expr>,
    },
    Return(Box<Expr>),
    Unit,
}
```

I’ve omitted all the implementation code and the more boring bits. You can see how this core code forms the axis around which the rest of the project orbits. The parser has to emit instances of these types in a parse tree; the interpreter has to operate on these types in that tree; the type checker can naturally check the tree; and it’s clear where to add code if a new type is requested.
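One concrete reason an enum like this works as an axis: Rust’s `match` must be exhaustive, so adding a variant to `Expr` turns every pass that doesn’t handle it yet into a compile error (E0004, non-exhaustive patterns) pointing at the exact spot. That gives an assistant like Claude, or a human, a checklist for free. Here’s a minimal sketch with a hypothetical three-variant `Expr` (not Lift’s) and two passes over it:

```rust
#[derive(Clone, Debug, PartialEq)]
enum Expr {
    Int(i64),
    Neg(Box<Expr>),
    Add(Box<Expr>, Box<Expr>),
    // Adding a variant here, e.g. `Mul(Box<Expr>, Box<Expr>)`, makes both
    // `interpret` and `describe` below fail to compile until they handle it.
}

/// Tree-walking evaluation: one arm per variant and no wildcard `_` arm,
/// so the compiler checks that every variant is covered.
fn interpret(expr: &Expr) -> i64 {
    match expr {
        Expr::Int(n) => *n,
        Expr::Neg(inner) => -interpret(inner),
        Expr::Add(l, r) => interpret(l) + interpret(r),
    }
}

/// A second pass over the same tree; it gets the same exhaustiveness check.
fn describe(expr: &Expr) -> String {
    match expr {
        Expr::Int(n) => n.to_string(),
        Expr::Neg(inner) => format!("-({})", describe(inner)),
        Expr::Add(l, r) => format!("({} + {})", describe(l), describe(r)),
    }
}

fn main() {
    let e = Expr::Add(Box::new(Expr::Int(2)), Box::new(Expr::Neg(Box::new(Expr::Int(5)))));
    println!("{} = {}", describe(&e), interpret(&e)); // (2 + -(5)) = -3
}
```

The flip side is that a catch-all `_ => ...` arm silences this check, so it’s worth avoiding in the core passes.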

Lastly, early on I asked Claude to keep updating CLAUDE.md (a file, created when you run /init, that gives a basic overview of the project). These updates saved a lot of flailing by Claude. It recorded the fact that semicolons are expression separators, not terminators (as in Rust, versus C). It learned how to build with LALRPOP and generate a new parser. It learned how the type checker worked, so it avoided writing a bunch of failing code early on.
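The separator rule is easy to get backwards, so here is a minimal sketch of what it means for a block’s value. It assumes a toy block body in which every expression is an integer literal (this is not Lift’s grammar or interpreter, and `eval_block` is a hypothetical name). Splitting on `;` leaves an empty final piece when there’s a trailing semicolon, and that empty piece evaluates to `Unit`, matching the CLAUDE.md note that a semicolon after the last expression produces `()`.

```rust
/// The two kinds of values in this toy: integers and Unit, written `()`.
#[derive(Debug, PartialEq)]
enum Value {
    Int(i64),
    Unit,
}

/// Evaluate a toy block body such as `1; 2; 3`, where every expression is an
/// integer literal. `;` is treated as a separator: the block's value is the
/// value of the last piece, and a trailing `;` leaves an empty last piece,
/// which evaluates to `Unit`. An empty body is `Unit` as well.
fn eval_block(body: &str) -> Result<Value, String> {
    let mut last = Value::Unit;
    for piece in body.split(';').map(str::trim) {
        last = if piece.is_empty() {
            Value::Unit
        } else {
            let n = piece
                .parse::<i64>()
                .map_err(|_| format!("not an integer literal: {piece}"))?;
            Value::Int(n)
        };
    }
    Ok(last)
}

fn main() {
    println!("{:?}", eval_block("1; 2")); // Ok(Int(2))
    println!("{:?}", eval_block("1; 2;")); // Ok(Unit)
}
```

Rust itself behaves the same way: `{ 1; 2 }` evaluates to `2`, while `{ 1; 2; }` evaluates to `()`.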

People often talk online about how they’re successful with coding agents, but you don’t often see specific examples of how they do it. Here’s my CLAUDE.md from the lift-lang project. Keep in mind, I didn’t write this file.

You create an initial CLAUDE.md with /init; the version here is much larger than that. I asked Claude Code to track important changes, the directions I gave it on how the project is meant to work, and details of the language we’re developing. It did the rest.


# CLAUDE.md

This file provides guidance to Claude Code (claude.ai/code) when working with code in this repository.

## Development Commands

```bash
# Build the project
cargo build

# Build in release mode
cargo build --release

# Run the REPL
cargo run

# Run with a source file
cargo run -- test.lt

# Run all tests
cargo test

# Run a specific test
cargo test test_name

# Run interpreter tests
cargo test test_interpreter

# Run type checking tests
cargo test test_typecheck

# Format code
cargo fmt

# Run linter
cargo clippy

# Check code without building
cargo check
```

## Architecture Overview

Lift is a statically-typed, expression-based programming language that can be both interpreted and compiled. It uses a tree-walking interpreter and LALRPOP parser generator.

### Core Components

1. **Parser Pipeline**
   - `src/grammar.lalrpop`: LALRPOP grammar definition that generates the parser
   - `build.rs`: Build script that invokes LALRPOP to generate `grammar.rs`
   - Parser produces AST nodes defined in `syntax.rs`

2. **AST and Type System** (`src/syntax.rs`)
   - Central `Expr` enum defines all expression types
   - `DataType` enum represents the type system (Int, Flt, Str, Bool, List, Map, Range, Unsolved)
   - `LiteralData` for literal values
   - Operators defined with proper precedence

3. **Interpreter** (`src/interpreter.rs`)
   - Tree-walking interpreter that evaluates `Expr` nodes
   - `interpret()` method on `Expr` is the main entry point
   - Handles runtime values and operations
   - Variable scoping through environment passing
   - Supports logical operators (And, Or)
   - Handles Unit expressions

4. **Type Checking** (`src/semantic_analysis.rs`)
   - **FULLY IMPLEMENTED** - Type checking for all expression types
   - Strict type checking suitable for compiled language
   - Type inference from literal values
   - `typecheck()` performs semantic analysis
   - `determine_type()` for type inference
   - No Unsolved types allowed in operations

5. **Symbol Table** (`src/symboltable.rs`)
   - Manages variable and function definitions
   - Supports nested scopes with hierarchical structure
   - Stores compile-time and runtime values separately
   - Methods: `get_symbol_type()`, `get_symbol_value()`
   - Used during both type checking and interpretation

6. **REPL** (`src/main.rs`)
   - Interactive shell with syntax highlighting (rustyline)
   - Multi-line input support (use `\` for continuation)
   - Command history
   - Handles both REPL mode and file execution
   - Contains comprehensive test suite

### Language Design Principles

#### Expression-Based

- Everything in Lift is an expression that returns a value

- Blocks return the value of their last expression

- Even control structures like `if` and `while` are expressions

#### Semicolons

- **IMPORTANT**: Semicolons are expression separators, NOT terminators

- The last expression in a block should NOT have a semicolon if you want the block to return that value

- Adding a semicolon after the last expression creates a Unit expression `()`

#### Type System

- Statically typed with type inference

- All types must be resolved at compile time (no Unsolved types in operations)

- Types can be inferred from literal values

- Explicit type annotations required when inference isn't possible

- Numeric types (Int/Flt) can be mixed in operations

### Language Features

#### Data Types

- **Primitive**: Int, Flt (not Float!), Str, Bool, Unit

- **Composite**: List, Map, Range

- **Special**: Unsolved (used during type checking)

#### Variables

```lift
let x = 5; // Type inferred as Int
let y: Int = 10; // Explicit type annotation
let name = 'Alice'; // Strings use single quotes
```

#### Functions

```lift
function add(x: Int, y: Int): Int {
    x + y // No semicolon - returns the value
}

// Function calls use named arguments
add(x: 5, y: 3)
```

#### Control Flow

**If/Else/Else If** (Latest feature):

```lift
// Basic if-else
if condition { expr1 } else { expr2 }

// Else if chains
if score >= 90 {
    'A'
} else if score >= 80 {
    'B'
} else if score >= 70 {
    'C'
} else {
    'F'
}

// Optional else (returns Unit if condition is false)
if x > 5 { output('Greater') }
```

**While Loops**:

```lift
while x < 10 {
    x = x + 1
}
```

#### Operators

- **Arithmetic**: `+`, `-`, `*`, `/`

- **Comparison**: `>`, `<`, `>=`, `<=`, `=`, `<>`

- **Logical**: `and`, `or`, `not`

- **String**: `+` (concatenation)

#### Comments

```lift
// Single-line comment
let x = 42; // Inline comment

/* Multi-line comment
can span multiple lines
and is useful for documentation */
let y = 10 /* inline block comment */ + 5;
```

### Recent Changes

1. **Type Checker Completion**
   - Implemented type checking for ALL expression types
   - Added strict checking for compiled language support
   - Better error messages for type mismatches
   - Type inference from literals

2. **Else If Support**
   - Grammar updated to support `else if` chains
   - Each `else if` is transformed into nested `If` expressions
   - Missing `else` clauses default to `Unit`
   - Full test coverage added

3. **Negative Number Support**
   - Negative numbers are now properly supported as unary operators
   - Works for both integers (`-42`) and floats (`-3.14`)
   - Handles parser ambiguity correctly (e.g., `1-3` is parsed as `1 - 3`, not `1` and `-3`)
   - Negative literals are optimized to true literals in the AST
   - Full test coverage added

4. **Comment Support**
   - Single-line comments with `//`
   - Multi-line comments with `/* */`
   - Implemented at lexer level using LALRPOP match patterns
   - Comments are properly ignored in all contexts

5. **List Literals**
   - Syntax: `[elem1, elem2, ...]`
   - Type inference from elements (all elements must have compatible types)
   - Empty lists require type annotations (e.g., `let empty: List of Int = []`)
   - Converted to `RuntimeList` (Vec-backed) during interpretation
   - Supports nested lists and expressions as elements
   - Display format: `[1,2,3]`

6. **Map Literals**
   - Syntax: `#{key: value, key2: value2, ...}`
   - Uses `#{}` to avoid conflict with block syntax `{}`
   - Key types: Int, Bool, Str (no Float keys due to HashMap requirements)
   - Type inference from first key-value pair
   - Empty maps require type annotations
   - Converted to `RuntimeMap` (HashMap-backed) during interpretation
   - Supports nested structures (maps of lists, etc.)
   - Display format: `{1:'one',2:'two'}` (sorted by key)

7. **Comment Support**
   - Single-line comments with `//` syntax
   - Multi-line comments with `/* */` syntax
   - Comments can appear anywhere in the code
   - Properly handles comment-like text in strings
   - Works with inline comments in expressions
   - Full test coverage added

### Test Programs

Test programs are located in the `tests/` directory:

- `tests/test_else_if.lt` - Demonstrates else if chains

- `tests/test_if_no_else.lt` - Shows optional else behavior

- `tests/test_typechecker.lt` - Type checking examples

- `tests/test_type_error.lt` - Type error example

- `tests/test_needs_annotation.lt` - Shows when type annotations are needed

- `tests/type_checking_examples.lt` - Various type checking scenarios

- `tests/test_negative_numbers.lt` - Tests negative number literals and operations

- `tests/test_comments.lt` - Tests comment support with various scenarios

### Known Limitations

1. List indexing not yet implemented (e.g., `mylist[0]`)

2. Map key access not yet implemented (e.g., `mymap['key']`)

3. Match expressions not yet implemented

4. No module/import system

5. Limited standard library (only `output` function)

6. No type aliases or custom types

7. Assignment (`x = value`) not yet implemented

8. Return statements exist but may need refinement

9. No automatic Int to Flt conversion - types must match exactly in operations

10. While loops don't return values (use recursion for computed loops)

11. `output()` adds quotes around strings and spaces between items

12. No methods for adding/removing items from lists or maps

13. Empty list/map literals require explicit type annotations

### Common Development Tasks

When modifying the parser:

1. Edit `src/grammar.lalrpop`

2. Run `cargo build` to regenerate the parser

3. Check for LALRPOP errors in the build output

4. Test with `cargo test`

When adding new expression types:

1. Add variant to `Expr` enum in `syntax.rs`

2. Add parsing rule in `grammar.lalrpop`

3. Add evaluation logic in `interpreter.rs` (interpret method)

4. Add type checking logic in `semantic_analysis.rs` (typecheck function)

5. Add symbol handling in `add_symbols` if needed

6. Add tests in `main.rs`

### Testing Strategy

- Unit tests for individual components (parsing, type checking)

- Integration tests for full programs

- Test both successful execution and error cases

- Use descriptive test names like `test_interpreter_else_if`

- **Integration tests for .lt files**: `cargo test` now automatically runs all `.lt` files in the `tests/` directory

- Tests verify both successful execution and expected errors

- Helper function `run_lift_file()` handles parsing, type checking, and interpretation

- Test functions named `test_lt_file_*` for each .lt file

### Implementation Challenges and Solutions

#### 1. Negative Number Support

**Challenge**: Parser ambiguity - `1-3` was tokenized as two separate tokens `1` and `-3`

**Solution**:

- Removed negative sign from lexer number regex

- Implemented unary minus operator at parser level

- Optimized negative literals directly in the AST for better performance

#### 2. Grammar Conflicts for Map Literals

**Challenge**: `{` token already used for blocks, causing reduce/reduce conflicts

**Solution**:

- Adopted `#{}` syntax for map literals to distinguish from blocks

- Clear and unambiguous parsing without grammar conflicts

#### 3. Empty Collection Type Inference

**Challenge**: Cannot infer types for empty lists `[]` or maps `#{}`

**Solution**:

- Require explicit type annotations for empty collections

- Error messages guide users to add type annotations

- Consistent with statically-typed language principles

#### 4. MapLiteral in determine_type

**Challenge**: Test failures due to missing MapLiteral case in type inference

**Solution**:

- Added MapLiteral handling in determine_type function

- Infers key type from KeyData variant, value type from first element

#### 5. Collection Symbol Processing

**Challenge**: Borrowing issues when processing nested collections in add_symbols

**Solution**:

- Used `iter_mut()` for proper mutable iteration

- Maintained ownership while traversing nested structures

Keep in mind, lift-lang is mostly a toy, with ambitions. Would I expect to use Claude Code effectively on a real production compiler? No way. I’d give it a shot, but the sheer amount of code would make it challenging.

Getting More out of AI Coding Agents

Beyond learning specific commands in Claude Code, I think the best thing you can do to improve your AI coding sessions is to spend time modeling your project’s core concepts. Write out, in your target programming language, high-level representations of the things you’ve thought deeply about. Include comments with your ideas about where it could go next, even if you can’t quite express them in code yet. Just asking Claude to create all your business logic or objects will work up to a point, but the results will be generic. Moreover, writing that core code forces you to think, and in thinking you’ll get more specific and come up with better ideas. If this foundation is solid, Claude can build on it successfully.
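To make that concrete, here is a hypothetical sketch of such a foundation for a map-based board game like the one earlier in this post. It is not taken from the actual game project, and the two rules it encodes (claim only unclaimed territory; attack only a neighboring territory you don’t own, from one you do) are invented for illustration. The point is the shape: small types that name the core concepts, one function that states a rule, and a comment recording where the design could go next.

```rust
use std::collections::HashMap;

/// Stable identifier for a territory, so map data, game logic, and UI
/// can all refer to the same place without passing names around.
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
struct TerritoryId(u32);

#[derive(Clone, Copy, Debug, PartialEq, Eq)]
struct PlayerId(u8);

#[derive(Clone, Debug)]
struct Territory {
    name: String,
    owner: Option<PlayerId>, // None means unclaimed
    neighbors: Vec<TerritoryId>,
}

/// Everything a player can do on a turn. A new kind of move is a new
/// variant, and every `match` on `Move` must then decide how to handle it.
#[derive(Clone, Debug)]
enum Move {
    Claim(TerritoryId),
    Attack { from: TerritoryId, to: TerritoryId },
    Pass,
}

// Idea for later: a computer player could generate candidate `Move`s and
// score them using only `Board`, with no knowledge of rendering.

struct Board {
    territories: HashMap<TerritoryId, Territory>,
}

impl Board {
    /// The (invented) rules, stated once: claim only unclaimed territory;
    /// attack only a neighbor you don't own, from a territory you do own.
    fn is_legal(&self, player: PlayerId, mv: &Move) -> bool {
        match mv {
            Move::Claim(id) => self.territories.get(id).map_or(false, |t| t.owner.is_none()),
            Move::Attack { from, to } => match (self.territories.get(from), self.territories.get(to)) {
                (Some(f), Some(t)) => {
                    f.owner == Some(player) && t.owner != Some(player) && f.neighbors.contains(to)
                }
                _ => false,
            },
            Move::Pass => true,
        }
    }
}

fn main() {
    let mut territories = HashMap::new();
    territories.insert(
        TerritoryId(1),
        Territory { name: "France".into(), owner: Some(PlayerId(0)), neighbors: vec![TerritoryId(2)] },
    );
    territories.insert(
        TerritoryId(2),
        Territory { name: "Spain".into(), owner: None, neighbors: vec![TerritoryId(1)] },
    );
    let board = Board { territories };
    let attack = Move::Attack { from: TerritoryId(1), to: TerritoryId(2) };
    let target = &board.territories[&TerritoryId(2)].name;
    println!("attack {target}: {}", board.is_legal(PlayerId(0), &attack)); // attack Spain: true
}
```

With types like these in place, a request such as “add a fortify move” already has an obvious home: a new `Move` variant, plus whatever the compiler then flags as unhandled.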