After you have done the obvious algorithm commplexity analysis on your C++ application in code you’ve written, what’s next on the list for ways to optimize your application? How about looking for more efficient implementations of standard data structures in libraries?

Optimizing C++ Applications With Better Hash Tables

The IPUMS Data Conversion Program (DCP), written with mostly standard modern C++, makes heavy use of hash tables where there are no better alternatives. Until now we’ve stuck with the STL “unordered_map”, for standard hash table functionality, and “map” where we need ordered keys.

I knew performance could be better if the standard “unordered_map<>” STL containers were replaced with something faster – not that I was certain I could find a significantly better implementation.

We have many hash tables holding “translation tables” (typically fairly small tables mapping many coding schemes to one standardized one used by IPUMS.) These get read in at start-up, and are applied on every record of data processed.

Additionally we have a smaller number of special large look-ups to – among other purposes – substitute geography codes for satellite data, also read at start-up time. On every record DCP takes a standardized input and looks up the correct value for the current dataset. For instance, a look-up table might contain location data common to all datasets from a country, but every dataset has different crop yield values at those locations for every year.

Notice that these uses don’t involve a lot of inserts throughout the run of the program, just look-ups? That got me thinking about compile-time maps, but for logistical reasons these won’t easily work for us. (If it were extremely critical I could work something out and it’d be even faster than what I did here.) Nevertheless I wondered if our use case could be served better with an alternative to the standard “unordered_map.”

Replacing the standard STL “unordered_map” with a different hash table implementation might not be high on your list as a place to start looking for chances to optimize, (unless you’re a game developer.) But consider:

- Changing out the STL “unordered_map” for an alternative (assuming it uses the same interface as “unordered_map”) is quite easy

- Some parts of your code that use hash tables really can’t be done any other way without a lot of effort to refactor, so you need some sort of hash table

- The STL “unordered_map” has good general purpose performance but your code may lean heavily on a particular use case where “unordered_map” doesn’t do well.

Searching brought me to I Wrote the Fastest Hash Table. After a bit of experimentation I decided it was the best candidate so far and I switched out the standard hash table everywhere it was easy and everywhere “unordered_map” could plausibly create a bottleneck. I changed the code so that the new hash table class is aliased with a type name so that I can quickly replace it with a different implementation should I discover a better one.

This one is fastest for lookups, and also fast – but not the fastest – for inserts and deletes.

The largest draw-back of the new hash table implementation is that it takes up significantly more memory. Fortunately that doesn’t matter in this case; even fully loaded with DCP processes our servers still have plenty of memory to spare. But if lookup tables were to grow ten times larger memory use could become a limiting factor and we’d need to consider other implementations.

Replace Standard Hash Tables: Results

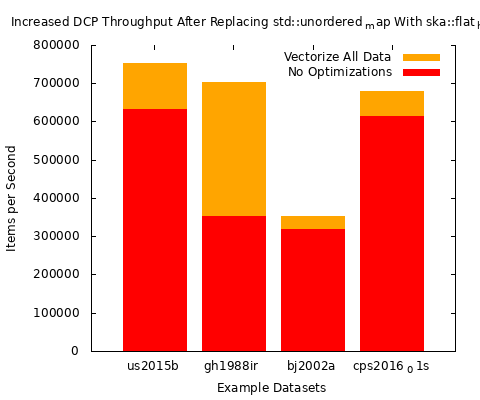

Here are four representative datasets, one each from four IPUMS data products. The numbers are “data items” processed per second in a single thread on one of our servers.

dataset Standard Better Hashtables

us2015b 632923 753480

gh1988ir 352008 704218

bj2002a 319014 351911

cps2016_01s 613245 680882

Data items processed per second. A higher number is better.

Not Earth-shattering, but worthwhile given that we could make the change with a simple find-and-replace and a few tweaks here and there. TheDHS dataset gained the most, which makes sense, because DHS makes heavy use of lookups compared to any other product.

For some context, DHS as of this writing has about twenty lookup tables with 75,000 entries each, and they are consulted on every record. All datasets from all IPUMS products make use of translation tables, but many are very small and lookups from them don’t constitute a bottleneck.

Here’s a nice visualization of the above data: